
Sensor Selection: Laser Sensor Specifications Explained

Manufacturers use many terms to describe sensor performance: accuracy, resolution, repeatability or reproducibility, linearity, etc. Not all manufacturers use the same specifications, which can make it challenging to compare different models of sensors. The following guide explains common sensor specifications and discusses how to use them to find the right sensor for your application.

Isn't Accuracy Most Important?

One of the first specifications you might expect to see is accuracy. Accuracy represents the maximum difference between the measured value and the actual value; the smaller the difference between the measured value and actual value, the greater the accuracy. For example, accuracy of 0.5 mm means that the sensor reading will be within ± 0.5 mm of the actual distance.

However, accuracy is often not the most important value to consider for industrial sensing and measurement applications. Keep reading to see why and to learn the most important specifications to take into consideration based on the type of application.

Key Specifications for Discrete Applications

For discrete laser measurement sensors, Banner provides two key specifications: repeatability and minimum object separation. While these are both useful for comparing products for discrete sensing, minimum object separation will be the most valuable for helping you select a sensor that can perform reliably in a real world application.

Repeatability

Repeatability (or reproducibility) refers to how reliably a sensor can repeat the same measurement in the same conditions. Repeatability of 0.5 mm means that multiple measurements of the same target will be within ± 0.5 mm.

This specification is commonly used among sensor manufacturers and can be a useful point of comparison; however, it is a static measurement that may not represent the sensor’s performance in real world applications.

Repeatability specs are based on detecting a single-color target that does not move. The specification does not factor in variability of the target, including speckle (microscopic changes in target surface) or color/reflectivity transitions that can have a significant impact on sensor performance.
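As a rough illustration of the ± definition above, the sketch below takes a handful of repeated readings of one stationary target and reports the half-width of the band that contains them. The readings and the function name `static_repeatability` are hypothetical, and this is only one simple way to read the spec, not a description of how any manufacturer derives its published value.

```python
# Hypothetical readings (mm) of one stationary, single-color target.
readings_mm = [100.2, 99.9, 100.4, 99.8, 100.1]


def static_repeatability(readings):
    """Return the half-width (mm) of the band that contains every reading."""
    return (max(readings) - min(readings)) / 2


band = static_repeatability(readings_mm)
print(f"Observed static repeatability: ±{band:.2f} mm")
```

Because the target never moves or changes color here, the result says nothing about speckle or reflectivity transitions, which is the limitation described above.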

Minimum Object Separation (MOS)

Minimum Object Separation (MOS) refers to the minimum distance a target must be from the background to be reliably detected by a sensor. A minimum object separation of 0.5 mm means that the sensor can detect an object that is at least 0.5 mm away from the background.

Minimum object separation is the most important and valuable specification for discrete applications. This is because MOS captures dynamic repeatability by measuring different points on the same object at the same distance. This gives you a better idea of how the sensor will perform in real world discrete applications with normal target variability.

Importance of MOS in Discrete Applications

In the image to the right, Q4X sensors are being used to identify whether a washer is present in an engine block.

If the sensor detects a slight height difference, even as small as 1 mm, it will send a signal to alert operators that a washer is missing or that there are multiple washers present.

The MOS specification tells you the smallest height change the sensor can reliably detect, so it determines whether a difference of this size can be resolved.
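The decision in the washer example can be sketched as a comparison against a taught distance. This is a minimal sketch, assuming the sensor looks down at the washer so that a missing washer reads farther away and stacked washers read closer. The function `classify_washer`, the 100 mm taught distance, and the 0.5 mm MOS value are all hypothetical, not taken from a datasheet.

```python
def classify_washer(measured_mm, taught_mm, mos_mm):
    """Classify a distance reading against the taught one-washer distance.

    A change smaller than the minimum object separation (MOS) cannot be
    reliably distinguished from the taught condition, so it counts as "ok".
    """
    delta = measured_mm - taught_mm
    if abs(delta) < mos_mm:
        return "ok"
    # Farther than taught: surface below the washer is seen (washer missing).
    # Closer than taught: extra height is present (multiple washers).
    return "missing" if delta > 0 else "multiple"


TAUGHT_MM = 100.0  # hypothetical distance to a single washer
MOS_MM = 0.5       # hypothetical minimum object separation

print(classify_washer(100.2, TAUGHT_MM, MOS_MM))  # ok
print(classify_washer(101.0, TAUGHT_MM, MOS_MM))  # missing
print(classify_washer(99.0, TAUGHT_MM, MOS_MM))   # multiple
```

With a 1 mm washer and a 0.5 mm MOS, both fault conditions fall outside the band; a sensor whose MOS exceeded 1 mm could not separate them from the good part.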

Key Specifications for Analog Applications

For analog applications, Banner provides resolution and linearity specifications. While resolution is the most common spec used by sensor manufacturers, linearity is the most useful for many applications that require consistent measurements across the range of the sensor.

Resolution

Resolution tells you the smallest change in distance a sensor can detect. A resolution of <0.5 mm means that the sensor can detect changes in distance as small as 0.5 mm. This spec is equivalent to best-case static repeatability, but it is expressed as a single absolute value rather than as a ± band.

The challenge with resolution specs is that they represent a sensor’s resolution in “best case” conditions, so they don’t provide a complete picture of sensor performance in the real world and sometimes overstate sensor performance. In typical applications, resolution is impacted by target conditions, distance to the target, sensor response speed, and other external factors. For example, glossy objects, speckle, and color transitions are all sources of error for triangulation sensors that can affect resolution.

Linearity

Linearity refers to how closely a sensor’s analog output approximates a straight line across the measuring range. The more linear the sensor’s measurements, the more consistent the measurements across the full range of the sensor. Linearity of 0.5 mm means that the greatest variance in measurement across the sensor’s range is ± 0.5 mm.

In other words, linearity is the maximum deviation between an ideal straight-line measurement and the actual measurement. In analog applications, if you can teach the near and far points, the accuracy of the sensor display is less important than how linear the output is. This is because the more linear the output, the more faithfully it tracks changes in distance between the taught points.

For example, say a target is placed 100 mm away from two sensors, and both sensors are taught at 100 mm and 200 mm. At 100 mm, Sensor A measures 100 mm and Sensor B measures 110 mm. At 200 mm, Sensor A measures 200 mm and Sensor B measures 210 mm. The target is then moved to 150 mm away from the sensors. Sensor A measures 153 mm, and Sensor B measures 160 mm.

| Actual Distance | Sensor A Display | Sensor B Display |
|---|---|---|
| 100 mm | 100 mm | 110 mm |
| 150 mm | 153 mm | 160 mm |
| 200 mm | 200 mm | 210 mm |

In this case, Sensor A is more accurate because its readings are closer to the actual distance at each point. But Sensor B is more linear because its readings are offset from the actual distance by the same amount (10 mm) at every point, so they change consistently across the range of the sensor.
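The comparison between the two sensors can be checked numerically. The sketch below uses the three readings from the example and measures linearity as the largest deviation from the straight line through the first and last readings. That end-point definition is an assumption made for illustration (a best-fit line is another common choice), and the function names are hypothetical.

```python
actual_mm = [100, 150, 200]
sensor_a_mm = [100, 153, 200]
sensor_b_mm = [110, 160, 210]


def max_accuracy_error(actual, measured):
    """Largest absolute difference between measured and actual distance."""
    return max(abs(m - a) for a, m in zip(actual, measured))


def max_linearity_error(actual, measured):
    """Largest deviation from the line through the first and last readings."""
    x0, x1 = actual[0], actual[-1]
    y0, y1 = measured[0], measured[-1]
    slope = (y1 - y0) / (x1 - x0)
    return max(abs(m - (y0 + slope * (a - x0))) for a, m in zip(actual, measured))


for name, readings in (("A", sensor_a_mm), ("B", sensor_b_mm)):
    acc = max_accuracy_error(actual_mm, readings)
    lin = max_linearity_error(actual_mm, readings)
    print(f"Sensor {name}: accuracy error {acc} mm, linearity error {lin} mm")
```

Sensor A comes out with a 3 mm accuracy error and a 3 mm linearity error, while Sensor B has a 10 mm accuracy error but zero linearity error, which matches the conclusion above.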

Importance of Linearity in Analog Applications

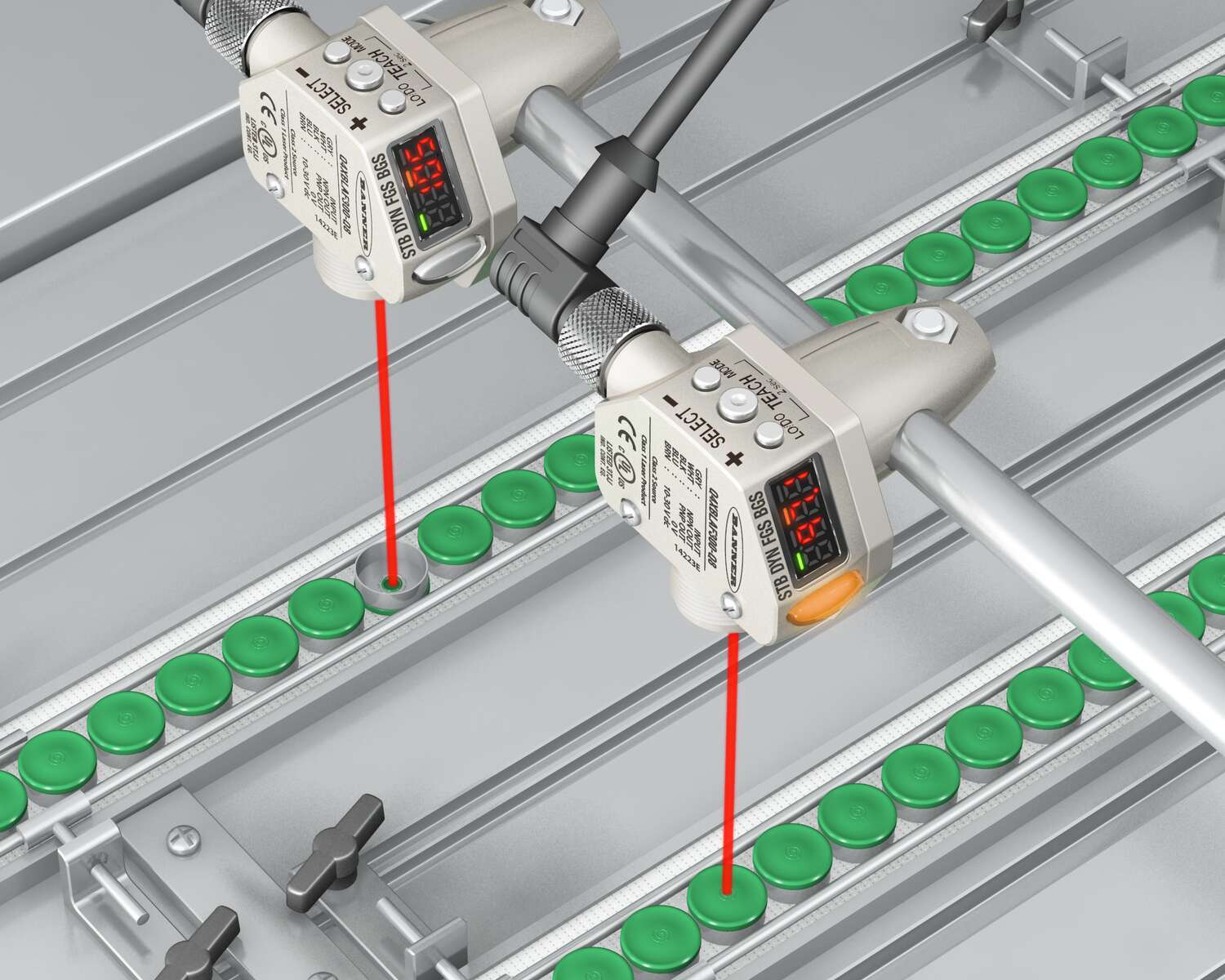

In the image to the right, the two-point teach option on the Q4X analog laser measurement sensor is used to teach a full (4 mA) and empty (20 mA) magazine. The analog output provides a real time gauge of the stack height.

The more linear the sensor is, the better the measurements between a full and empty magazine. With perfect linearity, half of the stack would be gone when the sensor gives 12 mA.
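The relationship between loop current and stack height can be sketched as a simple linear mapping. This assumes, as in the example, that a full magazine was taught at 4 mA and an empty one at 20 mA, and that the sensor is perfectly linear. The function name `stack_fraction_remaining` is hypothetical.

```python
FULL_MA = 4.0    # current taught for a full magazine
EMPTY_MA = 20.0  # current taught for an empty magazine


def stack_fraction_remaining(current_ma):
    """Map loop current to the fraction of the stack remaining (1.0 = full).

    Assumes a perfectly linear output between the two taught points;
    readings outside the taught span are clamped to 0.0..1.0.
    """
    fraction = (EMPTY_MA - current_ma) / (EMPTY_MA - FULL_MA)
    return min(1.0, max(0.0, fraction))


print(f"{stack_fraction_remaining(12.0):.0%} of the stack remains at 12 mA")
```

Any linearity error in a real sensor shows up as a difference between this ideal fraction and the true stack height at intermediate currents.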

Temperature Effect

Temperature Effect refers to the measurement variation that occurs due to changes in ambient temperature. A temperature effect of 0.5 mm/°C means that the measured value can vary by 0.5 mm for every degree Celsius of change in ambient temperature.

Total Expected Error

Total Expected Error is the most important specification for analog applications. This is a holistic calculation that estimates the combined effect of factors including linearity, resolution, and temperature effect. Since these factors are independent, they may be combined using the Root-Sum-of-Squares method to calculate the Total Expected Error.

The graphic below is an example of a Total Expected Error calculation for an analog sensor.

The result of these calculations is more valuable than the individual specs because it provides a more complete picture of a sensor’s performance in real world applications.

Banner provides the necessary specs to calculate Total Expected Error in our product datasheets.
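The Root-Sum-of-Squares combination described above can be sketched in a few lines. All numbers below are hypothetical and chosen only to make the arithmetic easy to follow; they are not taken from any datasheet. The sketch also assumes the temperature contribution is the temperature-effect coefficient multiplied by the expected temperature swing.

```python
import math


def total_expected_error(*components_mm):
    """Combine independent error components (mm) by Root-Sum-of-Squares."""
    return math.sqrt(sum(c ** 2 for c in components_mm))


# Hypothetical values for illustration only.
linearity_mm = 2.0
resolution_mm = 1.0
temp_effect_mm_per_c = 0.2
temp_swing_c = 10
temperature_mm = temp_effect_mm_per_c * temp_swing_c  # 2.0 mm

total = total_expected_error(linearity_mm, resolution_mm, temperature_mm)
print(f"Total Expected Error: ±{total:.2f} mm")
```

Note that the combined figure (3 mm here) is smaller than the simple sum of the components (5 mm), because independent errors are unlikely to all peak in the same direction at once.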

Key Specifications for IO-Link Applications

Repeatability, or how reliably the sensor can repeat the same measurement, is a common specification for IO-Link sensors. However, as with discrete applications, repeatability is not the only or most important factor for IO-Link applications.

Accuracy also becomes more important here. As mentioned previously, accuracy is the maximum difference between the actual value and the measured value. When using IO-Link, the measured value (shown on the display) is directly communicated to the PLC. Therefore, it is important that the value be as close to “true” as it can be.

The best-case scenario for an IO-Link application is a sensor that is both accurate and repeatable. However, if the sensor is repeatable but not accurate, it is still possible for the user to calibrate out the offset via the PLC.
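Calibrating out an offset amounts to measuring a target at a known distance, recording how far the sensor's readings are from it, and subtracting that amount from later readings. The sketch below shows the arithmetic in Python for readability; a real PLC would implement it in its own language. The function names and the sample values are hypothetical.

```python
def estimate_offset(reference_mm, readings_mm):
    """Average difference between sensor readings and a known reference distance."""
    return sum(readings_mm) / len(readings_mm) - reference_mm


def apply_offset(reading_mm, offset_mm):
    """Correct a raw reading by removing the previously estimated offset."""
    return reading_mm - offset_mm


# Hypothetical: a target at a known 100 mm reads about 110 mm.
offset = estimate_offset(100.0, [110.1, 109.9, 110.0])
print(f"Estimated offset: {offset:.1f} mm")
print(f"Corrected reading: {apply_offset(160.0, offset):.1f} mm")
```

This only works when the sensor is repeatable: a constant offset can be subtracted, but random scatter between readings cannot.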

Importance of Accuracy in IO-Link Applications

In this example application pictured to the right, a Q4X laser measurement sensor detects the presence of dark colored inserts on a dark colored automotive door panel.

The IO-Link process data shows distance to where the insert should be to determine if the insert is present. Measurement must be accurate regardless of the target color.

Total Expected Error for IO-Link Applications

Total Expected Error is the most important specification for IO-Link applications. For IO-Link sensors, Banner calculates the Total Expected Error a bit differently than for analog applications: it represents the combined effect of accuracy, repeatability, and temperature effect. Again, since these factors are independent, they can be combined using the Root-Sum-of-Squares method to calculate the Total Expected Error.

See below for an example of how this is calculated for IO-Link sensors.

As with the Total Expected Error for analog applications, the result of these calculations is more valuable for IO-Link applications than the individual specs because it provides a more complete picture of a sensor’s performance in real world applications.

Banner provides the necessary specs to calculate Total Expected Error in our product datasheets.
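Written out, the Root-Sum-of-Squares combination for IO-Link sensors takes the following form. The symbols are introduced here for illustration and are assumptions, not notation from the datasheets: $E_{\text{acc}}$ is the accuracy, $E_{\text{rep}}$ the repeatability, $k_T$ the temperature effect in mm/°C, and $\Delta T$ the expected change in ambient temperature in °C.

```latex
E_{\text{total}} = \sqrt{E_{\text{acc}}^{2} + E_{\text{rep}}^{2} + \left(k_T \, \Delta T\right)^{2}}
```

Multiplying the temperature effect by $\Delta T$ converts it to millimeters so that all three terms share the same unit before they are combined.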